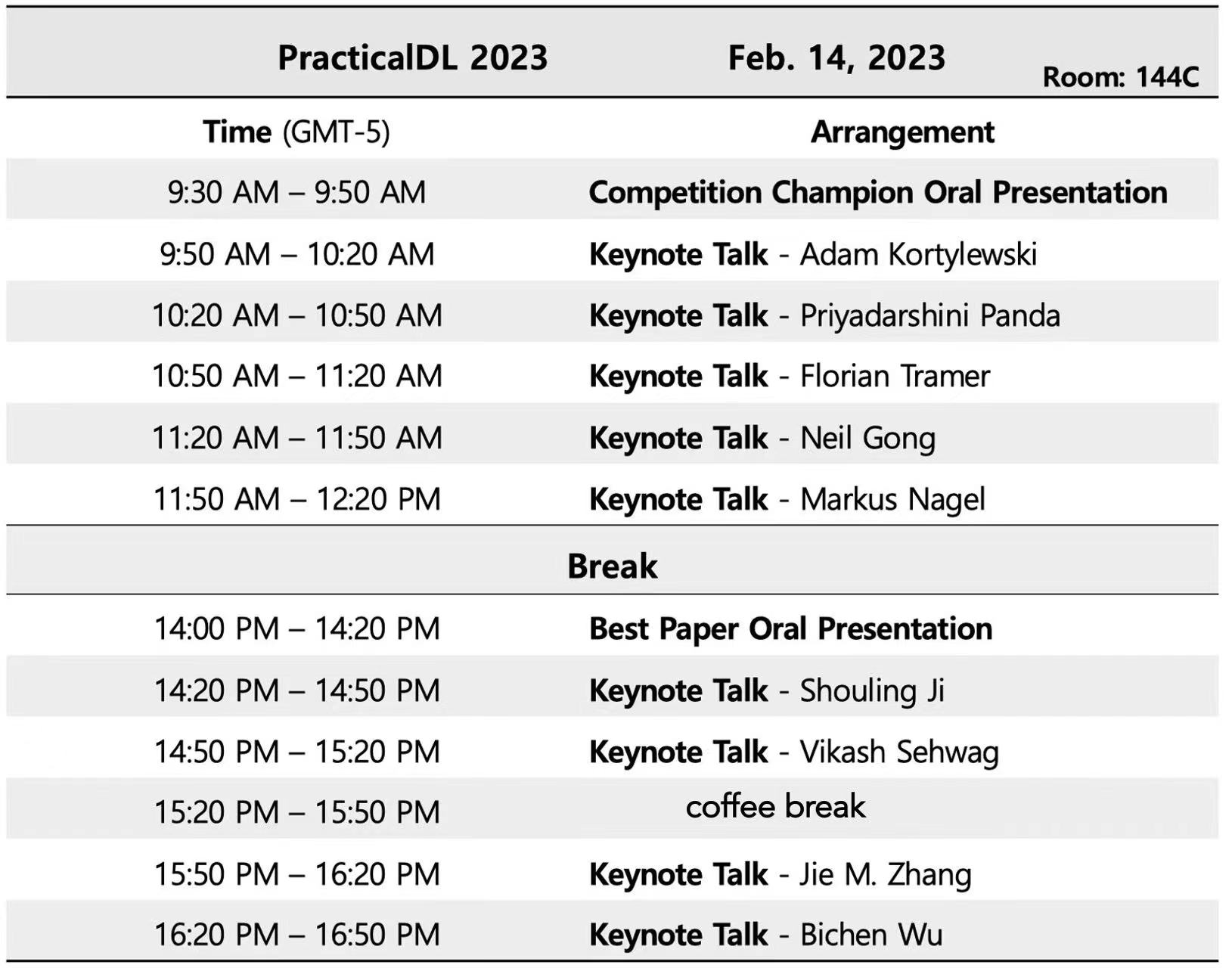

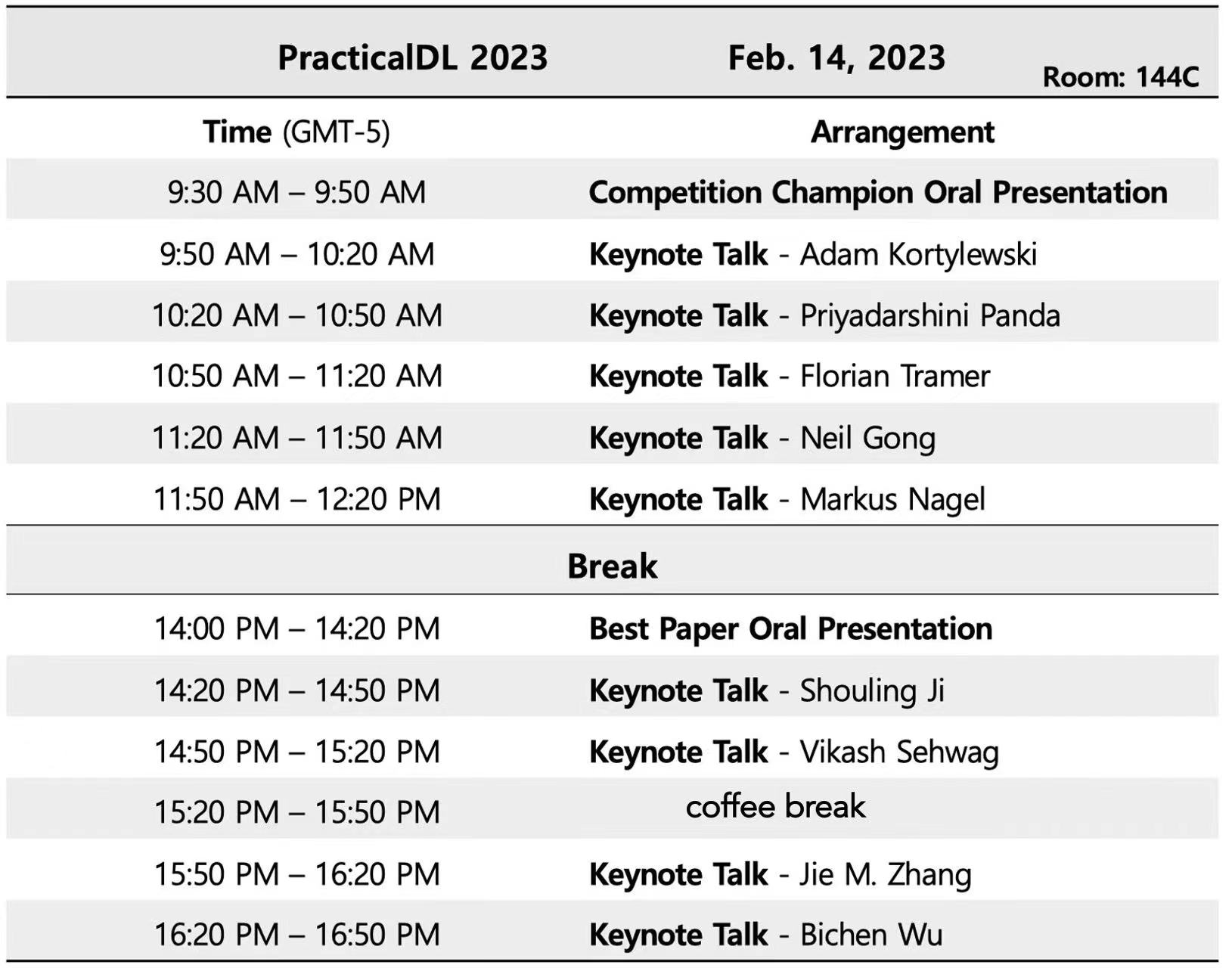

Workshop Schedule

| Name | Affiliation |
| --- | --- |
| Adam Kortylewski | |
| Priyadarshini Panda | |
| Florian Tramer | |
| Neil Gong | |
| Markus Nagel | |
| Jie M. Zhang | King's College London |
| Vikash Sehwag | Princeton University |
| Shouling Ji | Zhejiang University |
| Bichen Wu | Meta |
| Haotong Qin | |
| Ruihao Gong | |
| Jiakai Wang | |
| Siyuan Liang | |
| Zeyu Sun | |
| Aishan Liu | Beihang University |
| Wenbo Zhou | University of Science and Technology of China |
| Shanghang Zhang | Peking University |
| Fisher Yu | ETH Zürich |
| Xianglong Liu | Beihang University |

1st International Workshop on Practical Deep Learning in the Wild @ AAAI 2022